1. How to make all data explorable

At the beginning of every data analysis is data exploration. A suitable data catalog allows data experts to search all available assets and data structures in seconds. A few features and functions are particularly relevant for data exploration.

In this context, a data catalog with a high degree of automation is key to long-term success. Data must always be kept up to date, and without enormous manual effort. Analysts can also use an automatically synchronized catalog to check at any time whether the data is current and thus suitable for the question at hand.
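As a rough illustration of such a freshness check, the following sketch assumes the catalog exposes a last-synchronization timestamp per asset. The `CatalogEntry` class, its fields, and the asset names are hypothetical and not taken from any specific catalog product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class CatalogEntry:
    """Hypothetical catalog record; the field names are illustrative only."""
    name: str
    last_synced: datetime  # when the catalog last synchronized this asset


def is_fresh(entry: CatalogEntry, max_age: timedelta, now: datetime) -> bool:
    """Return True if the asset was synchronized within max_age of now."""
    return now - entry.last_synced <= max_age


# Fixed reference time so the example is reproducible.
now = datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)
orders = CatalogEntry("sales.orders", datetime(2024, 1, 10, 6, 0, tzinfo=timezone.utc))
customers = CatalogEntry("crm.customers", datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc))

for entry in (orders, customers):
    status = "fresh" if is_fresh(entry, timedelta(days=1), now) else "stale"
    print(f"{entry.name}: {status}")
```

How old data may be before it counts as stale depends on the question being asked, which is why `max_age` is passed in per check rather than fixed globally.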

In addition to clear documentation and available business context, a powerful search is one of the most relevant features. When different user groups use the catalog, diverse filtering and sorting functions are very helpful in practice. In general, a catalog's features should always be tailored to its actual users and not to "ideal" or "desirable" ones.
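To make the idea of search combined with filtering and sorting more concrete, here is a minimal sketch. The `Asset` structure, the `search` function, and the sample entries are assumptions made for illustration; a real catalog would typically use a search index rather than a linear scan.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """Hypothetical catalog asset; all fields are illustrative."""
    name: str
    description: str
    owner: str
    tags: set[str] = field(default_factory=set)


def search(assets, query, tag=None, sort_key="name"):
    """Case-insensitive keyword match on name or description,
    optionally filtered by tag and sorted by the given attribute."""
    q = query.lower()
    hits = [a for a in assets if q in a.name.lower() or q in a.description.lower()]
    if tag is not None:
        hits = [a for a in hits if tag in a.tags]
    return sorted(hits, key=lambda a: getattr(a, sort_key))


assets = [
    Asset("sales.orders", "Customer orders per day", "finance", {"sales", "certified"}),
    Asset("crm.customers", "Customer master data", "marketing", {"crm"}),
    Asset("web.clicks", "Raw clickstream events", "marketing", {"web"}),
]

print([a.name for a in search(assets, "customer")])
print([a.name for a in search(assets, "customer", tag="certified")])
```

Different user groups can share the same search and simply apply different filters, for example analysts restricting results to certified assets.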

2. What it takes for efficient data analysis

Once data has been successfully found, two steps are essential for successful analysis: quickly developing a good understanding of the data and having the right tools available for analysis.

Develop a good understanding of the data

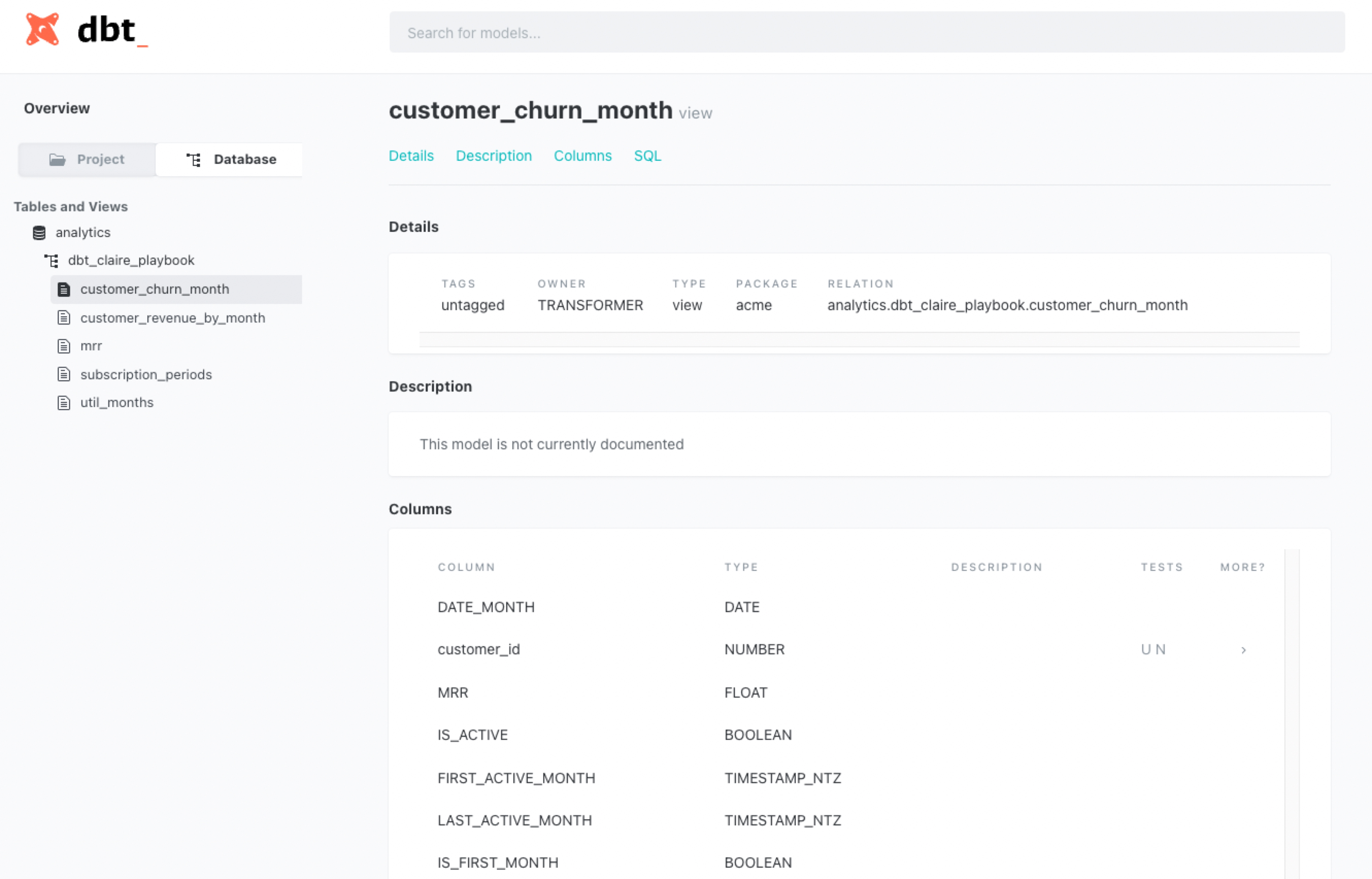

A data catalog with its business glossary and documentation helps to gain a technical understanding. Data profiling tools and functions, on the other hand, allow data experts to quickly gain a good first impression of the data characteristics. Statistics, real-world data distributions, dataset characteristics and sample values are all useful here. Great Expectations is an open-source profiling tool that has proven itself in our projects.
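The kind of first impression a profiling step provides can be sketched with the Python standard library alone. This is not the Great Expectations API; `profile_column` and the sample rows are hypothetical and only illustrate which figures are typically collected.

```python
from collections import Counter
from statistics import mean


def profile_column(values, n_samples=3):
    """Collect basic profile figures for one column (None marks a missing value)."""
    present = [v for v in values if v is not None]
    profile = {
        "count": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(set(present)),
        "top_values": Counter(present).most_common(3),  # rough value distribution
        "samples": present[:n_samples],                 # sample values
    }
    # Numeric statistics only make sense for numeric columns.
    if present and all(isinstance(v, (int, float)) for v in present):
        profile.update(min=min(present), max=max(present), mean=mean(present))
    return profile


# Made-up sample data for illustration.
rows = [
    {"country": "DE", "amount": 120.0},
    {"country": "DE", "amount": 80.0},
    {"country": "FR", "amount": None},
    {"country": None, "amount": 100.0},
]

for column in ("country", "amount"):
    print(column, profile_column([r[column] for r in rows]))
```

A dedicated profiling tool adds much more (type inference, histograms, validation rules), but even these few figures quickly reveal missing values and unexpected categories.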

The right tools for your use cases

The direct connection of the data source, the data catalog and the application tools (e.g. BI tools) is very helpful for data analysts. With modern tools and cleanly set up APIs, you enable your experts to jump quickly between tools at any time and thus work efficiently. One tip for implementation is to store an overview not only of data but also of available tools in the data catalog.

3. How data can be curated easily

Once data for a use case has been quickly and successfully identified and analyzed, the typical data preparation steps follow: cleansing, enrichment and combination with other data. SQL is a very good way to process the data further. With SQL, data can be flexibly queried from various sources in a variety of settings. However, do not forget data governance at this point! There are various governance tools that support the management of access rules.
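The three preparation steps can be illustrated with a single SQL query. The sketch below runs it against an in-memory SQLite database from Python; the tables, columns, and values are invented for the example, and a real setup would query the actual source systems.

```python
import sqlite3

# In-memory database with made-up sample data.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, country TEXT, amount REAL);
    CREATE TABLE customers (customer_id INTEGER, segment TEXT);
    INSERT INTO orders VALUES
        (1, 10, ' de ', 120.0),
        (2, 11, 'FR', NULL),
        (3, 10, 'DE', 80.0);
    INSERT INTO customers VALUES (10, 'enterprise'), (11, 'smb');
""")

curated = conn.execute("""
    SELECT o.order_id,
           UPPER(TRIM(o.country)) AS country,  -- cleansing: normalize country codes
           o.amount,
           c.segment                           -- enrichment: add the customer segment
    FROM orders AS o
    JOIN customers AS c                        -- combination with other data
      ON c.customer_id = o.customer_id
    WHERE o.amount IS NOT NULL                 -- cleansing: drop incomplete rows
    ORDER BY o.order_id
""").fetchall()

for row in curated:
    print(row)
```

Access rules are deliberately left out of this sketch; in practice they would be enforced by the database or a governance tool rather than inside the query.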

In our projects, providing all employees with the tools best suited to their case (as already recommended in section 2) was often central to efficient data curation. Combining a data catalog with flexible interfaces brings flexibility and transparency together in day-to-day operations.
